When Memory Decides It’s Tired of Being a Librarian and Starts Doing the Math

So This “New Italian Chip” Thing… What Is Actually Going On Here?

Alright. Pour a drink. Or don’t. But mentally, at least, loosen the tie.

Because this story sounds like one of those breathless “scientists reinvent computing” headlines. And it kind of is, but that only makes sense once you understand why modern computers are such spectacular energy hogs in the first place.

Every computer you’ve ever used is basically playing a very dumb game of fetch. Memory holds the data. The processor does the thinking. And the data spends its entire life being shuttled back and forth like an intern with a USB stick who never gets to sit down. That back-and-forth is expensive. Not metaphorically. Electrically. Thermally. Financially. Especially at data-center scale.
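To put a rough number on “expensive,” here is a back-of-envelope sketch of one 1,024-element dot product whose operands both come from off-chip DRAM. The two energy constants are illustrative placeholders in the ballpark of commonly cited 45 nm estimates. They are not measurements of this chip or any system in this story, and the helper name `dot_product_energy_pj` is hypothetical.

```python
# Back-of-envelope: how much of a dot product's energy goes to fetching operands?
# ASSUMPTION: both constants are illustrative placeholders, roughly in the range of
# widely cited 45 nm estimates, not measured values for any specific chip.
DRAM_READ_PJ_PER_WORD = 640.0  # hypothetical: fetch one 32-bit word from off-chip DRAM
MAC_PJ = 4.6                   # hypothetical: one 32-bit floating-point multiply + add


def dot_product_energy_pj(n: int) -> tuple[float, float]:
    """Return (fetch_energy, compute_energy) in picojoules for an n-element
    dot product whose two operand vectors are both streamed from DRAM."""
    fetch = 2 * n * DRAM_READ_PJ_PER_WORD  # two operands per element
    compute = n * MAC_PJ                   # one multiply-accumulate per element
    return fetch, compute


fetch, compute = dot_product_energy_pj(1024)
print(f"fetch:   {fetch / 1e6:.3f} uJ")                   # fetch:   1.311 uJ
print(f"compute: {compute / 1e6:.3f} uJ")                 # compute: 0.005 uJ
print(f"fetch share: {fetch / (fetch + compute):.1%}")    # fetch share: 99.6%
```

With these assumed numbers the fetches eat more than 99% of the budget and the arithmetic barely registers. Caches soften this in real systems, but the imbalance is the point: the fetch, not the math, is the bill.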

The Italian research team behind this chip looked at that mess and asked, more or less: “Why are we moving the data at all?” Then they did something mildly unhinged by modern standards: they let the memory do the computing.

Not metaphorically. Literally.

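Instead of ones and zeros marching in neat digital lines, this chip uses analog electrical behavior (voltages, currents, resistance), the same continuous, messy stuff engineers are usually paid to suppress. Physics does the math whether you like it or not: in a typical in-memory array, Ohm’s law multiplies a voltage by a conductance, and Kirchhoff’s current law adds up the currents meeting on a wire. The trick is to aim that behavior in the right direction and let the circuit settle into the answer.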

This isn’t nostalgia for the 1950s. It’s weaponized pragmatism.
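The multiply-and-add trick is easiest to see in the textbook version of in-memory computing: a resistive crossbar doing a matrix-vector multiply. This is a generic sketch, not the Italian team’s design, since the article doesn’t describe their circuit. The function name `crossbar_mvm`, the ideal noise-free devices, and the example conductances are all assumptions for illustration. Each cell’s conductance stores a matrix entry, input voltages drive the rows, Ohm’s law turns each cell into a multiplier, and Kirchhoff’s current law sums the cell currents on each column wire into a dot product.

```python
# Minimal model of an analog crossbar doing a matrix-vector multiply.
# ASSUMPTION: ideal devices (no noise, no wire resistance); G[i][j] is the
# conductance (siemens) of the cell joining input row i to output column j.


def crossbar_mvm(G: list[list[float]], V: list[float]) -> list[float]:
    """Return the current (amps) collected on each output column.

    Ohm's law gives each cell's current: I = G[i][j] * V[i].
    Kirchhoff's current law sums the currents meeting on each column wire.
    """
    rows, cols = len(G), len(G[0])
    if len(V) != rows:
        raise ValueError("need one input voltage per row")
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Sequential here; in hardware every cell conducts at the same time.
            currents[j] += G[i][j] * V[i]
    return currents


# Matrix entries stored as conductances (microsiemens), inputs applied as volts.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]
print(crossbar_mvm(G, V))  # ≈ [2.2e-06, 2.8e-06] amps
```

The Python loop visits one cell at a time, but in a physical array all cells conduct at once, so the whole product arrives in one settling time rather than after rows × cols instructions.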

The chip itself isn’t a general-purpose brain. It doesn’t run Windows. It doesn’t care about your browser tabs. It solves specific classes of problems, namely systems of linear and non-linear equations, which just so happen to sit at the mathematical core of modern AI, signal processing, and wireless systems.
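For the linear case, the “settle into the answer” idea can be illustrated with a toy continuous-time model. This is an assumption-heavy sketch, not the chip’s actual circuit: the function `settle`, the dynamics `dx/dt = b - A x`, and the forward-Euler integration are all choices made here for illustration. The residual `b - A x` acts like an error signal that keeps nudging the state until it vanishes, and at that point `x` solves `A x = b`.

```python
# Toy model of "let the circuit settle into the answer" for A x = b.
# ASSUMPTION: idealized continuous-time dynamics dx/dt = b - A x, integrated
# with forward Euler. This converges only when every eigenvalue of A has a
# positive real part (and dt is small enough); it is not the chip's real circuit.


def settle(A: list[list[float]], b: list[float], dt: float = 0.05,
           steps: int = 500) -> list[float]:
    n = len(b)
    x = [0.0] * n  # start from zero "voltages"
    for _ in range(steps):
        # Residual r = b - A x: the mismatch the feedback keeps pushing to zero.
        r = [b[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [x[i] + dt * r[i] for i in range(n)]
    return x


A = [[4.0, 1.0],
     [2.0, 3.0]]  # eigenvalues 5 and 2, so these toy dynamics are stable
b = [1.0, 2.0]
x = settle(A, b)
print([round(v, 6) for v in x])  # -> [0.1, 0.6]
```

Stability is the real catch in this toy: if any eigenvalue of `A` has a non-positive real part, the state drifts instead of settling. That is one illustration of why such solvers target specific classes of problems rather than arbitrary ones.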

And because the computation happens inside the memory arrays, the usual energy drain from moving data around largely disappears. Less traffic. Less heat. Less power. Shorter latency. Smaller silicon footprint. The whole thing feels like cheating, except it’s allowed by physics.
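A crude way to see where the savings come from is to count the words crossing the memory boundary for one matrix-vector multiply. The accounting below is an assumption-laden sketch (hypothetical function names, no caching, no weight reuse across inputs). The digital path fetches every matrix entry, while the in-memory path only ships the input vector in and the result vector out, because the matrix already lives in the array.

```python
# Count words crossing the memory/processor boundary for y = W x,
# where W has `rows` x `cols` entries.
# ASSUMPTION: idealized accounting with no caching and no weight reuse; the
# in-memory case assumes W is already stored in the array.


def words_moved_digital(rows: int, cols: int) -> int:
    # Fetch every matrix entry and the input vector, then write the result back.
    return rows * cols + cols + rows


def words_moved_in_memory(rows: int, cols: int) -> int:
    # Only the input vector goes in and the output vector comes out.
    return cols + rows


n = 1024
d, m = words_moved_digital(n, n), words_moved_in_memory(n, n)
print(d, m, d // m)  # -> 1050624 2048 513
```

The no-reuse assumption flatters the in-memory side: a digital accelerator that batches many inputs fetches each weight once and amortizes it, which narrows the gap considerably. The gap is widest for one-input-at-a-time work such as low-latency inference.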

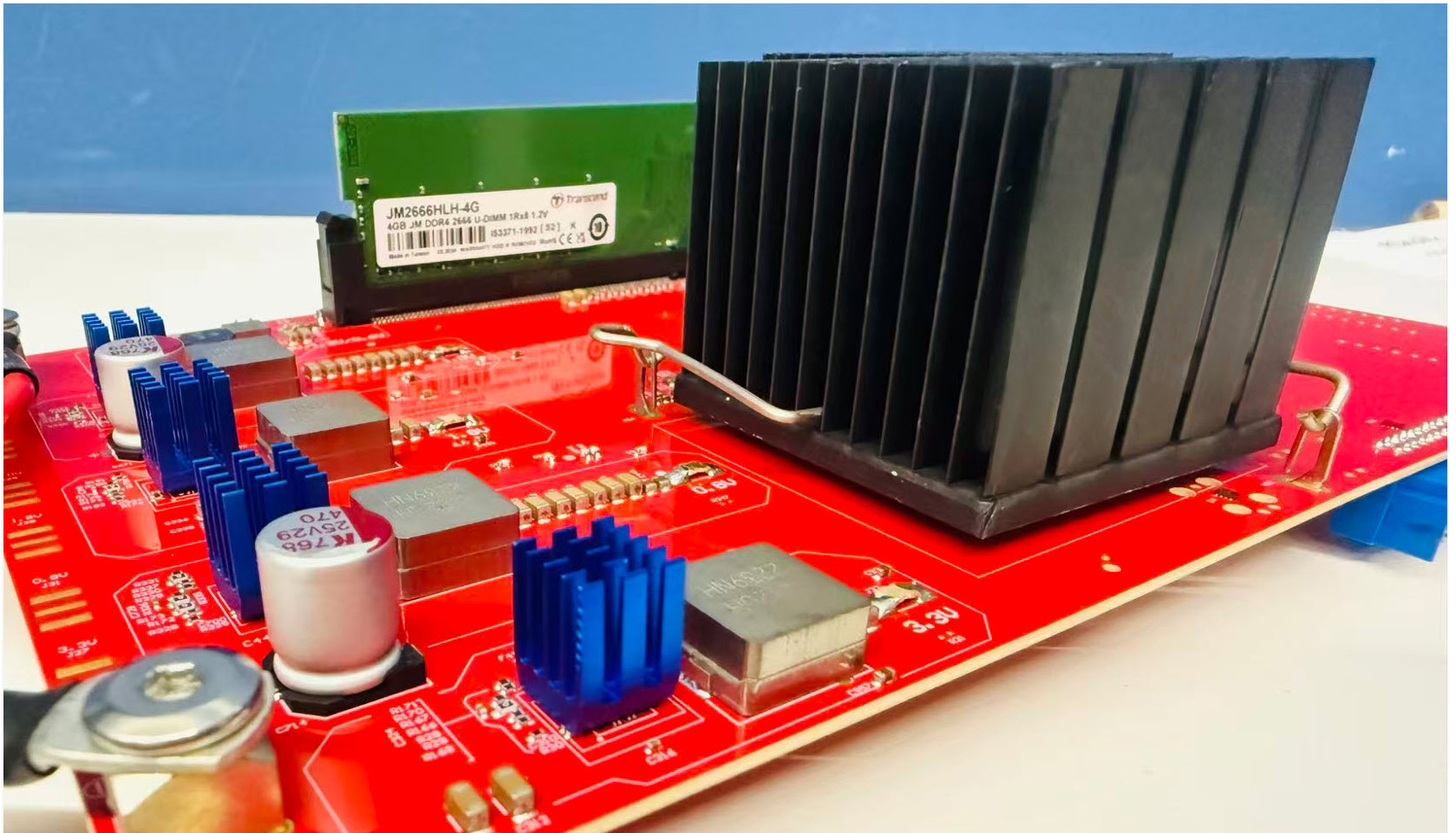

Now here’s the important part: this isn’t a thought experiment. It’s not a whiteboard fantasy. They built the chip using standard CMOS manufacturing: the boring, industrial, highly optimized process that already churns out nearly every other chip. That’s why this matters. Exotic ideas are cheap. Manufacturable ones are rare.

The energy numbers sound insane, orders of magnitude lower than comparable digital systems, and yes, there are asterisks. The savings apply only to certain workloads. You’re not replacing CPUs. You’re not killing GPUs. You’re carving out a new lane where brute-force digital logic is simply the wrong tool.

And that lane is getting crowded fast.

- AI inference and training workloads that are mostly matrix math

- Data centers where energy costs are now a line item executives actually fear

- Robotics and navigation systems that can’t afford cloud latency

- Wireless systems, from 5G to 6G to whatever comes after the buzzwords fade

This also explains why analog computing keeps “coming back.” It never really failed; it was just inconvenient while digital scaling was cheap. Now scaling is hard, power is expensive, and heat is everyone’s problem. Suddenly the old physics tricks look a lot smarter.

So is this brand-new news? Yes. This specific chip is fresh. But the idea behind it has been quietly fermenting for years, waiting for manufacturing, AI demand, and energy economics to collide at the same moment.

We’re watching that collision now.

Bottom line, slightly buzzed but still sincere: this isn’t a revolution that replaces your computer. It’s a pressure valve. A way out of a corner the industry painted itself into by assuming faster clocks and wider buses would save us forever.

Turns out sometimes the smartest move is to stop moving things around and let the electrons finish the job themselves.

Tags: AI accelerators, analog computing, data center hardware, energy-efficient chips, in-memory computing